Updated: 2/8/17

So over the weekend I was labbing as per normal, and I’ve been running into contention issues on my network. I think my tried and true Cisco 3750Gs are finally meeting their maker. The bonded quad 1G connections over a vDS are starting to show slowness. I am kind of hammering the crap out of the lab: it gets very slow when I set DRS/SDRS to fully automated, and the whole backup storage slows to a crawl once those 1G links are saturated.
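Part of why a quad 1G bond still feels slow: link aggregation places each flow on a single member link by hashing header fields, so one busy stream (a vMotion, an iSCSI session, a backup job) tops out at 1G no matter how many links are in the bond. Here is a rough sketch of that behaviour. It assumes a simple src/dst IP hash through CRC32, which is *not* the exact algorithm a 3750G or the vDS uses, and the IP addresses are made up.

```python
import ipaddress
import zlib

NUM_LINKS = 4          # quad 1G bond
LINK_SPEED_GBPS = 1.0  # speed of each member port


def pick_link(src_ip: str, dst_ip: str, num_links: int = NUM_LINKS) -> int:
    """Map a flow to one member link using a simplified src/dst IP hash.

    Real switches and the vDS use their own hash inputs and algorithms; this
    only models the key property: a given flow always lands on the same link.
    """
    key = ipaddress.ip_address(src_ip).packed + ipaddress.ip_address(dst_ip).packed
    return zlib.crc32(key) % num_links


def per_link_flows(flows, num_links: int = NUM_LINKS) -> list[int]:
    """Count how many flows the hash places on each member link."""
    counts = [0] * num_links
    for src, dst in flows:
        counts[pick_link(src, dst, num_links)] += 1
    return counts


def single_flow_ceiling_gbps() -> float:
    """One flow is confined to one member, so it never exceeds one link's speed."""
    return LINK_SPEED_GBPS


if __name__ == "__main__":
    # Hypothetical addresses: one host hammering one backup target.
    flows = [("10.0.10.11", "10.0.10.50")] * 8
    print(per_link_flows(flows))       # all 8 flows pile onto the same member link
    print(single_flow_ceiling_gbps())  # 1.0
```

Going to 10G raises that per-flow ceiling directly, instead of relying on many different flows hashing nicely across the bond.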

So I think I’m upgrading the Dell R610s to 10G for iSCSI and LAN traffic.

I already have a Cisco 3750E as the core switch and (4) Cisco 3750Gs in the rack, and the (4) Cisco 3750Es have just arrived; I’m just waiting for the 10G X2 cards to arrive. 😀

The additional SonicWall TZ 210 arrived on Wednesday (snagged it for $50). I think I’ll use one of the slim (1U) 3750Gs as the DMZ switch.

I already have a QNAP TS-531X as my 10G-ready NAS, but it’s only running at 1G on a dual bond. However, I did make a kind of impulsive move and bought a TS-831X through work as well, since we get a steep QNAP discount there. I think I might use the TS-531X as the new backup appliance and/or Plex server.

After some research, I think I’m going with these as the most cost-effective way to go 10G:

- (10) X2-10G-SFP cards

- (3) Broadcom® NetXtreme® II 57711 dual-port 10G cards

- (4) Cisco StackWise cables (have (2) already)

- (1) Raspberry Pi 3

- A few twinax cables
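A quick port budget for that shopping list. This is my own back-of-the-envelope sketch, assuming five 3750Es in total (the core plus the four that just arrived), two X2 slots on each, and one dual-port 57711 per R610 across three hosts (inferred from ordering three cards). None of these names come from any tool; they are just labels.

```python
X2_SLOTS_PER_3750E = 2   # each 3750E has two X2 10G uplink slots
NUM_3750E = 1 + 4        # core switch plus the four that just arrived
X2_MODULES_ORDERED = 10  # (10) X2-10G-SFP cards
HOSTS = 3                # assumption: one 57711 per R610, three cards ordered
PORTS_PER_57711 = 2      # dual-port card


def port_budget() -> dict:
    """Count usable 10G switch ports against the 10G ports the hosts need."""
    # Usable switch ports are limited by both slots and modules on hand.
    switch_ports = min(X2_SLOTS_PER_3750E * NUM_3750E, X2_MODULES_ORDERED)
    host_ports = HOSTS * PORTS_PER_57711
    return {
        "switch_10g_ports": switch_ports,
        "host_10g_ports": host_ports,
        "spare_switch_ports": switch_ports - host_ports,
    }


if __name__ == "__main__":
    print(port_budget())
    # {'switch_10g_ports': 10, 'host_10g_ports': 6, 'spare_switch_ports': 4}
```

Under those assumptions, the ten X2 modules fill every slot, and after the hosts take six ports there are four left over for things like the 10G NAS.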

The plan, for now, is to run single 10G connections on the two ports of each 57711: one port for iSCSI/vSAN and one for LAN traffic. I’ll set up Network I/O Control in vSphere 6.5 Enterprise Plus to manage the traffic. The existing dual quad-port NICs will be connected to the storage 3750Es as a quad-port LACP bond that acts as a standby link in case the 57711 goes offline, and likewise for the LAN traffic. Each quad-port LACP bond for LAN and management will most likely also be split 2-and-2 across the two quad-port NICs, so no bond depends on a single card and the traffic is balanced 2-by-2 across both NICs. I like redundancy.
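Roughly how Network I/O Control splits a saturated 10G uplink: under contention, each traffic type that is actually sending gets a slice proportional to its shares. This is a simplified sketch that ignores reservations and limits. The share values below are hypothetical picks for this lab, although Low = 25, Normal = 50 and High = 100 are NIOC’s preset levels.

```python
UPLINK_GBPS = 10.0

# Hypothetical share assignments for this lab (NIOC presets: Low=25, Normal=50, High=100).
SHARES = {
    "iSCSI": 100,
    "vSAN": 100,
    "Virtual machine": 50,
    "vMotion": 50,
    "Management": 25,
}


def allocate(active: list[str], shares: dict = SHARES, uplink_gbps: float = UPLINK_GBPS) -> dict:
    """Split a saturated uplink among the traffic types currently sending.

    Only active types compete; each gets uplink * its shares / sum of active shares.
    Reservations and limits are ignored in this sketch.
    """
    total = sum(shares[t] for t in active)
    return {t: uplink_gbps * shares[t] / total for t in active}


if __name__ == "__main__":
    print(allocate(["iSCSI", "Virtual machine", "vMotion"]))
    # {'iSCSI': 5.0, 'Virtual machine': 2.5, 'vMotion': 2.5}
```

Shares only matter when the link is actually congested. On an idle uplink, any single traffic type can use the full 10G.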

As for the 10G links, maybe at a later date I’ll snag another 3750E and another 57711 per host and reconfigure the traffic flows so storage has redundant 10G.

The network and storage fabrics will be in two separate stacks, and the network will be a collapsed core.

I feel this is the ideal setup since I’m moving toward VMware NSX, and I’d like to keep the physical network fabric as simple and as fast as possible. 🙂

I think I’ll keep the 3750G and 2960G around, as they might come in handy later on. 😀

Lastly, I still have the two Brocade 6610s I got off eBay last month, which I may just use as the storage switches. What’s sad is that I still need one more 10G license for the second 6610, and it’s $650 on eBay. Two more Cisco 3750Es are still cheaper at $105 apiece on eBay. So I’m perplexed. I’ll use these 6610s for something; maybe one becomes an overkill replacement for the DMZ 2960G. 😀
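The license-versus-switches math, spelled out. This only uses the eBay prices quoted above and leaves out anything else the extra 3750Es would need (X2 modules, optics or twinax), so treat it as a rough comparison.

```python
BROCADE_10G_LICENSE = 650  # one more 10G license for the second 6610 (eBay)
CISCO_3750E_EACH = 105     # eBay price per 3750E
EXTRA_3750E = 2            # two more switches


def compare() -> dict:
    """Compare the single Brocade license against two more 3750Es (prices only)."""
    cisco_total = CISCO_3750E_EACH * EXTRA_3750E
    return {
        "brocade_license": BROCADE_10G_LICENSE,
        "cisco_3750e_pair": cisco_total,
        "cisco_saves": BROCADE_10G_LICENSE - cisco_total,
    }


if __name__ == "__main__":
    print(compare())
    # {'brocade_license': 650, 'cisco_3750e_pair': 210, 'cisco_saves': 440}
```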

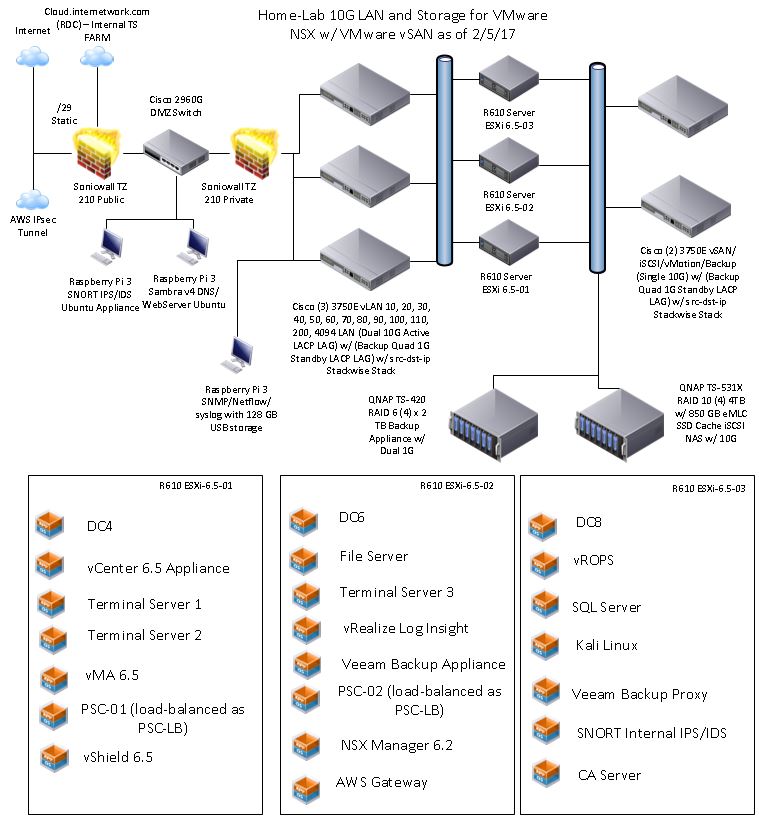

Here is the design idea. 🙂